Prelude

Today I found out my AWS Educate account was one month away from expiring, and that I had used barely any of the $30 I was granted, so, like the good student I am, I went on to (ab)use my tiny little EC2 instance by installing Nix on it and pulling in every compiler under the sun I could think of.

My thinking was this: every benchmark out there focuses on speed or, less often, memory usage, but rarely if ever on executable size, and I get that. If you think about it, this closely follows the price of the hardware behind each of those metrics: hard drives are the cheapest, while processors are the most expensive.

Regardless, I still firmly believe that, as a language implementor, putting code size on your list of due optimizations is important. Storage may be practically free to the fortunate, but large executables make the less fortunate more reluctant to use programs built with your toolchain. Executable sizes add up (imagine an operating system with a full Go or D userland... oh, but I won’t spoil it for you), and bandwidth can be costly. I’d rather the operating system take as little space as practically possible, to save the rest for pet pics and an “I swear I will read this… eventually” research paper collection.

Additionally, compilers that produce bigger executables need to think harder about how they’d fit the hot code in cache, while smaller executables may fit entirely in it, and cache is the name of the game these days. It’s expensive and makes a lot of difference in performance.

I thought it would be a good exercise to look at which of the wide selection of “systems” languages we have now make for a good tool under harsh code size constraints, without much effort. So, my game was quite simple: a hello world program, compiled with size optimizations (if available), stripped, wc’d, objdump’d, sized, nm’d, ldd’d, run and straced. There are limitations, of course:

- If the language includes a piece of runtime by default, but allows for its removal via a compiler flag, that runtime is kept. Because this isn’t a how-small-can-it-go test, it’s a how-small-can-it-go-without-effort test.

- If the language can’t fully statically link, any SO it links to is wc’d and counted entirely in its “executable size”.

- The language can’t be interpreted or compiled to bytecode. If I were to add those, I’d have to count the runtime as part of the “executable size”. Maybe I will try that in the future just for contrast.

- If the language exposes an interface to interact with an underlying C toolchain for codegen, the size-optimization options passed to plain C compilers are also passed via that interface.

- No linker flags (via -Wl,... etc).
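The second rule (every linked shared object is counted in full) can be sketched in Python roughly as follows. This is only an illustration, not the Makefile used for the measurements: the function names and the sample `ldd` text are made up, and it assumes `ldd` prints its usual `name => /path (address)` lines.

```python
import os
import subprocess


def shared_object_paths(ldd_output: str) -> list[str]:
    """Extract on-disk library paths from the text printed by `ldd`."""
    paths = []
    for line in ldd_output.splitlines():
        line = line.strip()
        if "=>" in line:
            # e.g. "libc.so.6 => /lib/libc.so.6 (0x00007f...)"
            target = line.split("=>", 1)[1].strip()
            if target.startswith("/"):
                paths.append(target.split(" (", 1)[0])
        elif line.startswith("/"):
            # e.g. "/lib64/ld-linux-x86-64.so.2 (0x00007f...)"
            paths.append(line.split(" (", 1)[0])
        # linux-vdso has no file on disk, and static binaries report
        # "not a dynamic executable"; both are skipped here.
    return paths


def counted_size(executable: str) -> int:
    """Bytes of the executable plus every shared object it links to."""
    result = subprocess.run(["ldd", executable], capture_output=True, text=True)
    libs = shared_object_paths(result.stdout)
    # os.path.getsize follows symlinks, so versioned .so links resolve.
    return os.path.getsize(executable) + sum(os.path.getsize(p) for p in libs)


# Hypothetical ldd output, for illustration only.
SAMPLE = (
    "\tlinux-vdso.so.1 (0x00007ffd4a5f2000)\n"
    "\tlibc.so.6 => /lib/x86_64-linux-gnu/libc.so.6 (0x00007f1c2a000000)\n"
    "\t/lib64/ld-linux-x86-64.so.2 (0x00007f1c2a400000)\n"
)
print(shared_object_paths(SAMPLE))
```

Calling `counted_size("./hello")` on a real binary would then give the "worst case" number for that executable, with linux-vdso left out because it has no file to measure.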

I’ve only come across one blog post which tried something like this. The competition was so weak, though: only C, C++, Go, Rust, and SBCL/CCL Lisp. My list is way bigger.

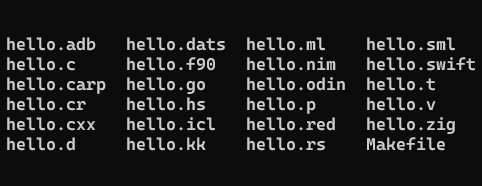

So which compilers did I pick?

You could ask which I didn’t:

- Carp

- Clang++

- Clang

- CLM (Clean)

- Crystal

- DMD (D)

- FPC (Free Pascal)

- G++

- GCC

- GDC (D)

- GFortran

- GHC (Haskell)

- GNAT (Ada)

- Go

- Koka

- LDC (D)

- MLton (StandardML)

- Nim

- OCamlopt

- Odin

- PATSopt (ATS2)

- Rustc

- Swift

- TCC

- Terra

- V

- Zig

Some of these compilers are still in research[1], but they’re here for novelty’s sake anyway. You can see the Makefile for running each of those commands here.

Now for the results

The wc -c test

First we do a preliminary size measurement of executables. The aim is to see how small they are before taking into consideration linkage. This is just the first impression and doesn’t mean much for now.

Unstripped:

| size (bytes) | compiler |
|---|---|
| 2294152 | gdc |
| 1945687 | go |
| 1022056 | ldmd2 |
| 1014944 | ghc |
| 988784 | dmd |
| 686424 | carp |
| 413392 | ocamlopt |
| 399752 | gnat |
| 351464 | rustc |
| 293360 | crystal |
| 220488 | mlton |
| 190784 | fpc |
| 189568 | odin |
| 132384 | v-transpiled |
| 132384 | v-native |
| 114552 | clm-gcmark |
| 114552 | clm-gccopy |
| 114552 | clm |
| 110128 | nim |
| 75952 | zig |
| 56232 | patsopt |
| 54960 | g++ |
| 54856 | gfortran |
| 54488 | terra |
| 54464 | gcc |
| 15344 | swiftc |
| 8888 | clang++ |
| 8192 | clang |
| 3068 | tcc |

Stripped:

| size (bytes) | compiler |
|---|---|
| 1363640 | go |
| 1349408 | gdc |
| 764512 | ghc |
| 671392 | dmd |
| 669584 | ldmd2 |
| 302784 | ocamlopt |
| 268616 | rustc |
| 247104 | gnat |
| 224160 | crystal |
| 190784 | fpc |
| 139944 | mlton |
| 137824 | odin |
| 121808 | carp |
| 114552 | clm-gcmark |
| 114552 | clm-gccopy |
| 114552 | clm |
| 88264 | v-transpiled |
| 88264 | v-native |
| 55544 | nim |
| 14592 | patsopt |
| 14440 | gfortran |
| 14440 | g++ |
| 14408 | terra |
| 14408 | gcc |
| 11936 | swiftc |
| 6264 | clang++ |
| 6208 | clang |
| 4496 | zig |
| 3064 | tcc |
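To see how much of each binary `strip` actually removes, the two tables can be compared directly. Here is a small Python sketch using a handful of rows copied from them; the selection of compilers and the helper name `strip_savings` are my own choices for illustration.

```python
# (unstripped, stripped) sizes in bytes, copied from the two tables above.
SIZES = {
    "gdc": (2294152, 1349408),
    "go": (1945687, 1363640),
    "carp": (686424, 121808),
    "zig": (75952, 4496),
    "gcc": (54464, 14408),
    "fpc": (190784, 190784),
    "tcc": (3068, 3064),
}


def strip_savings(sizes):
    """Return (compiler, bytes removed, fraction removed), biggest fraction first."""
    rows = []
    for name, (unstripped, stripped) in sizes.items():
        removed = unstripped - stripped
        rows.append((name, removed, removed / unstripped))
    return sorted(rows, key=lambda row: row[2], reverse=True)


for name, removed, fraction in strip_savings(SIZES):
    print(f"{name:6} {removed:>8} bytes removed ({fraction:.1%})")
```

Of the rows picked here, Zig loses the largest share of its unstripped size, while the Free Pascal binary is the same size in both tables.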

If we were to sort these languages into two categories, “has a substantial runtime” vs “has a minimal runtime”, the impressive ones would immediately shine through:

- Zig: While competing with Rust, Ada, Fortran, etc. as a viable, safer alternative to C or C++, Zig is surprisingly much leaner, and thus a lot more attractive for kernel dev and/or embedded systems.

- Clean: Although the language is functional, has an advanced type system, is very close to Haskell with a high ceiling of abstractions, and has a garbage collector (and thus falls under the “substantial runtime” category), Clean’s overhead is surprisingly minuscule. It even beats the more brutalist MLton. And if its runtime benchmarks show anything, this language is extremely underappreciated in FP circles.

- Swift: Refcounted languages are a gray-area category, as their runtimes are way simpler[2] than GC’d ones. Swift takes the cake in this category. It’s a pleasant language to work with, too, at least from what I’ve heard.

There are disappointments in those two tables, and the numbers speak for themselves, but before we get too hasty and put medals on the apparent winners here, let’s look at where they get their code from…

Linkage

The question of whether a system should rely on static or dynamic linkage has been the cause of many flamewars throughout computing history. The general trajectory these days seems to favor static linkage. It is a question worth exploring, though, given the premise of my initial paragraph in this blog post.

If we’re thinking exclusively in terms of a fully shared system, the size of a shared object can be thought of as divided across all the executables that use it, which may yield leaner systems past a certain threshold. But if we follow this argument to its end, the most efficient system would be one that has a common language runtime like the JVM or Mono, and only compact bytecode executables. Such a system has, of course, been implemented many times. Suffice it to say that most dumb phones ran a stripped-down Java runtime back in the mid-2000s, and those were quite constrained in storage and memory. Actually, in everything.
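The "certain threshold" can be made concrete with a back-of-the-envelope model. The sketch below assumes every program on the system links the same set of shared libraries and that only disk usage matters; the function name `break_even` is invented for illustration, and the byte counts are the stripped hello-world sizes and library sizes reported in this post.

```python
def break_even(static_size, dynamic_size, shared_libs_size):
    """Smallest number of programs at which the shared-library system
    uses less disk than the all-static one, or None if it never does."""
    saving_per_program = static_size - dynamic_size
    if saving_per_program <= 0:
        # The static binary is no bigger, so sharing can never pay off.
        return None
    # We need n * static_size > shared_libs_size + n * dynamic_size.
    return shared_libs_size // saving_per_program + 1


# libc + ld-linux-x86-64, the libraries a plain C hello world pulls in.
LIBC_AND_LOADER = 2071776 + 223776

# Static Go binary vs dynamically linked gcc binary.
print(break_even(1363640, 14408, LIBC_AND_LOADER))
# Static musl-gcc binary vs dynamically linked gcc binary.
print(break_even(13528, 14408, LIBC_AND_LOADER))
```

The first call prints 2: a second hello world is already enough for the shared setup to beat static Go. The second prints None, because the static musl binary is smaller than the dynamic one to begin with. Real programs use far more of libc than hello world does, so treat this as a toy model only.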

However, we are not following this argument to its logical end, and instead, we’re adamantly pushing our fingers in our ears and yelling as we count every single shared object as part of our executable. Think of it as a worst-case scenario or something—I don’t know.

Now for the measurements. First we look at all the libraries these executables link to, as a reference to their individual sizes. They’re sorted, but also grouped by relation to each other.

| shared object | size (bytes) |
|---|---|
| linux-vdso | embedded in linux kernel |
| libicudataswift | 27980992 |
| libswiftCore | 7939696 |
| libicui18nswift | 4109960 |
| libicuucswift | 2447952 |
| libgfortran | 2689664 |
| libquadmath | 292720 |
| libc | 2071776 |
| libstdc++ | 1903088 |
| libgcc_s | 112336 |
| libm | 1413704 |
| libgmp | 705008 |
| libpcre | 502136 |
| libevent-2 | 411072 |
| libgc | 231744 |
| libpthread | 140144 |
| libz | 120648 |
| librt | 49736 |
| libffi | 54432 |
| ld-linux-x86-64 | 223776 |
| libdl | 18544 |

Here’s a table of all the tested languages again, this time with linkage taken into account. Library sizes are summed; a cleaned-up table of their names is also provided here. Note that since all of them link to linux-vdso (except the static ones), the results are normalized to it, i.e. it isn’t counted. Note also that some language GC/backend variants are removed because they’re bit-for-bit the same.

| language | number of libs | total size (bytes) |
|---|---|---|
| swiftc | 11 | 48405096 |
| gfortran | 6 | 6818416 |
| g++ | 5 | 5739120 |
| clang++ | 5 | 5730944 |
| gdc | 7 | 5481528 |
| ghc | 8 | 5472824 |
| crystal | 10 | 5430320 |
| dmd | 7 | 4732600 |
| ldmd2 | 7 | 4730792 |
| mlton | 4 | 4554208 |
| ocamlopt | 4 | 4061776 |
| odin | 3 | 3847080 |
| carp | 3 | 3831064 |
| clm | 3 | 3823808 |
| rustc | 5 | 2866384 |
| gnat | 3 | 2592392 |
| nim | 3 | 2400832 |
| v-native | 2 | 2383816 |
| patsopt | 2 | 2310144 |
| terra | 2 | 2309960 |
| gcc | 2 | 2309960 |
| clang | 2 | 2301760 |
| tcc | 2 | 2298616 |
| go | fully-static ⭐ | 1363640 |
| fpc | fully-static ⭐ | 190784 |
| zig | fully-static ⭐ | 4496 |
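As a sanity check on how these totals come about, a few rows can be rebuilt from the stripped sizes and the library table. In the Python sketch below, the byte counts are copied from this post, but which libraries each binary links to is my inference (chosen because they reproduce the published totals), not something quoted from the measurements.

```python
# Sizes in bytes, copied from the tables in this post.
LIB_SIZES = {"libc": 2071776, "ld-linux-x86-64": 223776, "librt": 49736}
STRIPPED = {"gcc": 14408, "clang": 6208, "tcc": 3064, "nim": 55544}

# ASSUMPTION: inferred library sets, not taken from the original ldd output.
LINKS = {
    "gcc": ["libc", "ld-linux-x86-64"],
    "clang": ["libc", "ld-linux-x86-64"],
    "tcc": ["libc", "ld-linux-x86-64"],
    "nim": ["libc", "ld-linux-x86-64", "librt"],
}


def total_size(compiler):
    """Stripped executable plus the full size of every library it links to."""
    return STRIPPED[compiler] + sum(LIB_SIZES[lib] for lib in LINKS[compiler])


for compiler in LINKS:
    print(compiler, len(LINKS[compiler]), total_size(compiler))
```

With these assumed library sets, the sums match the gcc, clang, tcc and nim rows of the table exactly, which also shows how thoroughly libc dominates the total for the small C-family binaries.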

Swift, which was shining so brightly just a little while ago, is now doing the very worst. I kind of understand why, though; Swift was designed from the beginning to run on Apple ecosystems – where those shared libraries would exist anyway and be maintained often. Choosing to keep the executable size itself to a minimum and offloading everything to huge central shared libraries isn’t so far off from having a (dalvik) VM running all the time on the Android side. Nevertheless, Nim rightfully takes Swift’s place as the leaner refcounted language.

It would be nice if someone had a hosted musl vm and also tried these. Some of the languages in this comparison do have better sizes when they’re allowed to be fully-static. e.g. from personal experience, OCaml executables can go lower than 4MB on a musl backend (They’re like 1.5 MB).

What’s perhaps interesting in those tables is that out of all the executables here, only three are fully-static: the most bloated, Go executable, the moderately sized good ol’ Free Pascal one, and what seems to be the dark horse of this competition: Zig.

Zig executables manage to be both static and leaner than their dynamic gcc counterparts (as well as static musl ones; I just compiled a hello world via musl-gcc using the same Makefile options plus --static, and it’s 83336 B unstripped, 13528 B stripped). Zig’s not only smoking the competition for lower-level systems languages; it seems to also want a piece of the devops-friendly, low-memory, fast-compiling mid-level ones like V and Go! My hat is off to you, Andrew Kelley.

Future work

I’d like to explore -in future blog posts- the reasons why the larger executables are so large. I’d like to also try compiling a nontrivial program -written in a manner idiomatic to each language- to see how these languages behave with anything bigger than hello world, and how much cost they incur on organized modular code.

I got tired and didn’t dissect the executables with more binutils; that’s one for the future.

By the way, the hello world implementations are provided here, compiler versions here, and executables are uploaded here.

[1] Fun fact: Koka didn’t even compile, because the build ran out of memory. I believe it’s down to a GHC memory leak bug and the tiny memory capacity of my AWS instance.

Also, typing (( then hitting enter, then typing )) in the Carp REPL caused it to crash. I discovered a compiler bug without even trying to be subversive!

[2] Koka, on the other hand, is a disaster; its hello-world executables are well over an obscene 3 MB! For its release executables! With strip the size is cut dramatically to 400 KB, which makes me think there might be something missing in the way the compiler handles release builds. I love that language, and the research coming out of it is so exciting, but 400 kilobytes for a refcounted language that often gets compared to C and C++ is by no means turning heads, beyond its innovative refcounter and effects system of course.
